The following problem was asked in the Art of Problem Solving forum; here you can see the question (post #4) and my answer (post #5).

Remark. In steps 2), 4), and 5) of the solution of the following Problem, we use the fact that any bounded sequence of real numbers that is either increasing or decreasing is convergent (see the Fact in this post!).

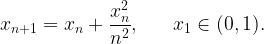

Problem. Consider the sequence $\{x_n\}$ defined by

$\displaystyle x_1 \in (0,1), \ \ \ \ \ x_{n+1}=x_n+\frac{x_n^2}{n^2}, \ \ \ n \ge 1.$

Show that $\{x_n\}$ is convergent.

Solution. I’m going to give the solution in several steps.

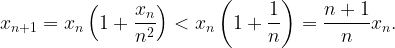

1) The sequence $\left\{\frac{x_n}{n}\right\}$ is decreasing.

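Proof. It’s clear that $x_n > 0$ and, by induction, $x_n < n.$ Thus

$\displaystyle \frac{x_{n+1}}{n+1}=\frac{x_n}{n+1}\left(1+\frac{x_n}{n^2}\right) < \frac{x_n}{n+1}\left(1+\frac{1}{n}\right)=\frac{x_n}{n}.$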

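2) $\displaystyle \lim_{n\to\infty}\frac{x_n}{n}=0.$

Proof. By 1), the sequence $\left\{\frac{x_n}{n}\right\}$ is decreasing and bounded below by $0,$ and so $\displaystyle a:=\lim_{n\to\infty}\frac{x_n}{n}$ exists and $0 \le a < 1.$ Now, by Stolz-Cesaro,

$\displaystyle a=\lim_{n\to\infty}\frac{x_{n+1}-x_n}{(n+1)-n}=\lim_{n\to\infty}\frac{x_n^2}{n^2}=a^2,$

and so $a=0.$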
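Steps 1) and 2) are easy to check numerically. The following is only a rough sketch, not part of the proof: the helper name `iterate` and the starting value $x_1 = 0.5$ are my own illustrative choices, and the recurrence is taken to be $x_{n+1}=x_n+x_n^2/n^2,$ as used in the steps below.

```python
def iterate(x1, steps):
    """Return [x_1, ..., x_steps] for x_{n+1} = x_n + x_n**2 / n**2."""
    xs = [x1]
    for n in range(1, steps):
        x = xs[-1]
        xs.append(x + x * x / (n * n))
    return xs


# Assumption: x_1 = 0.5 is an arbitrary starting value in (0, 1).
xs = iterate(0.5, 10_000)
ratios = [x / n for n, x in enumerate(xs, start=1)]

# Step 1): consecutive ratios x_n / n should strictly decrease.
is_decreasing = all(a > b for a, b in zip(ratios, ratios[1:]))
print("x_n / n decreasing:", is_decreasing)

# Step 2): the ratios should shrink toward 0.
for n in (1, 10, 100, 1000, 10_000):
    print(n, ratios[n - 1])
```

Of course a finite computation proves nothing about the limit; it only shows the behaviour that the two steps establish rigorously.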

3) The sequence ![\displaystyle \frac{x_n}{\sqrt[3]{n}}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cfrac%7Bx_n%7D%7B%5Csqrt%5B3%5D%7Bn%7D%7D&bg=ffffff&fg=000000&s=1&c=20201002) is eventually decreasing.


Proof. By 2), there exists an $N$ such that $x_n < \frac{n}{4}$ for all $n \ge N.$ It is easy to see that ![\displaystyle \frac{\sqrt[3]{1+x}-1}{x} > \frac{1}{4}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cfrac%7B%5Csqrt%5B3%5D%7B1%2Bx%7D-1%7D%7Bx%7D+%3E+%5Cfrac%7B1%7D%7B4%7D&bg=ffffff&fg=000000&s=1&c=20201002) for all $0 < x \le 1$ and so, taking $x=\frac{1}{n},$ for $n \ge N$ we have

![\displaystyle x_n < \frac{n}{4} < n^2\left(\sqrt[3]{1+\frac{1}{n}}-1\right),](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+x_n+%3C+%5Cfrac%7Bn%7D%7B4%7D+%3C+n%5E2%5Cleft%28%5Csqrt%5B3%5D%7B1%2B%5Cfrac%7B1%7D%7Bn%7D%7D-1%5Cright%29%2C&bg=ffffff&fg=000000&s=1&c=20201002)

which then gives

![\displaystyle \frac{x_{n+1}}{\sqrt[3]{n+1}}=\frac{x_n}{\sqrt[3]{n+1}}+\frac{x_n^2}{n^2\sqrt[3]{n+1}} < \frac{x_n}{\sqrt[3]{n}}.](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%5Cfrac%7Bx_%7Bn%2B1%7D%7D%7B%5Csqrt%5B3%5D%7Bn%2B1%7D%7D%3D%5Cfrac%7Bx_n%7D%7B%5Csqrt%5B3%5D%7Bn%2B1%7D%7D%2B%5Cfrac%7Bx_n%5E2%7D%7Bn%5E2%5Csqrt%5B3%5D%7Bn%2B1%7D%7D+%3C+%5Cfrac%7Bx_n%7D%7B%5Csqrt%5B3%5D%7Bn%7D%7D.&bg=ffffff&fg=000000&s=1&c=20201002)
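Both ingredients of step 3) can be sanity-checked with a few lines of Python. This is a sketch under assumptions: the grid on $(0,1],$ the helper `iterate` and the starting value $x_1=0.5$ are illustrative choices of mine, not part of the original solution.

```python
def iterate(x1, steps):
    """Return [x_1, ..., x_steps] for x_{n+1} = x_n + x_n**2 / n**2."""
    xs = [x1]
    for n in range(1, steps):
        x = xs[-1]
        xs.append(x + x * x / (n * n))
    return xs


# Check (cbrt(1 + x) - 1) / x > 1/4 on a grid of points in (0, 1].
grid = [k / 1000 for k in range(1, 1001)]
min_quotient = min(((1 + x) ** (1 / 3) - 1) / x for x in grid)
print("smallest quotient on the grid:", min_quotient)

# Assumption: x_1 = 0.5 is an arbitrary starting value in (0, 1).
xs = iterate(0.5, 5000)
scaled = [x / n ** (1 / 3) for n, x in enumerate(xs, start=1)]

# Largest n (within the computed range) at which x_n / cbrt(n) fails to drop,
# i.e. x_{n+1} / cbrt(n+1) >= x_n / cbrt(n); 0 if it never fails.
last_increase = max(
    (i for i in range(1, len(scaled)) if scaled[i] >= scaled[i - 1]),
    default=0,
)
print("x_n / cbrt(n) decreases for every computed n >", last_increase)
```

The quotient is smallest at $x=1,$ which is why the bound $\frac{1}{4}$ still has a little room there.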

4) ![\displaystyle b:=\lim_{n\to\infty}\frac{x_n}{\sqrt[3]{n}}=0.](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+b%3A%3D%5Clim_%7Bn%5Cto%5Cinfty%7D%5Cfrac%7Bx_n%7D%7B%5Csqrt%5B3%5D%7Bn%7D%7D%3D0.&bg=ffffff&fg=000000&s=1&c=20201002)

Proof. By 3), $b$ exists (as a finite number), and so, by Stolz-Cesaro,

![\displaystyle b=\lim_{n\to\infty}\frac{x_{n+1}-x_n}{\sqrt[3]{n+1}-\sqrt[3]{n}}=\lim_{n\to\infty}\frac{x_n^2}{n^2(\sqrt[3]{n+1}-\sqrt[3]{n})}=3\lim_{n\to\infty}\frac{\sqrt[3]{n^2}x_n^2}{n^2}](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+b%3D%5Clim_%7Bn%5Cto%5Cinfty%7D%5Cfrac%7Bx_%7Bn%2B1%7D-x_n%7D%7B%5Csqrt%5B3%5D%7Bn%2B1%7D-%5Csqrt%5B3%5D%7Bn%7D%7D%3D%5Clim_%7Bn%5Cto%5Cinfty%7D%5Cfrac%7Bx_n%5E2%7D%7Bn%5E2%28%5Csqrt%5B3%5D%7Bn%2B1%7D-%5Csqrt%5B3%5D%7Bn%7D%29%7D%3D3%5Clim_%7Bn%5Cto%5Cinfty%7D%5Cfrac%7B%5Csqrt%5B3%5D%7Bn%5E2%7Dx_n%5E2%7D%7Bn%5E2%7D&bg=ffffff&fg=000000&s=1&c=20201002)

![\displaystyle =3\lim_{n\to\infty}\frac{x_n^2}{n\sqrt[3]{n}}=3 b\lim_{n\to\infty}\frac{x_n}{n}=0, \ \ \ \ \ \ \ \text{by 2)}.](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+%3D3%5Clim_%7Bn%5Cto%5Cinfty%7D%5Cfrac%7Bx_n%5E2%7D%7Bn%5Csqrt%5B3%5D%7Bn%7D%7D%3D3+b%5Clim_%7Bn%5Cto%5Cinfty%7D%5Cfrac%7Bx_n%7D%7Bn%7D%3D0%2C+%5C+%5C+%5C+%5C+%5C+%5C+%5C+%5Ctext%7Bby+2%29%7D.&bg=ffffff&fg=000000&s=1&c=20201002)
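The factor $3\sqrt[3]{n^2}$ in the computation above comes from the asymptotic $\sqrt[3]{n+1}-\sqrt[3]{n} \sim \frac{1}{3\sqrt[3]{n^2}}.$ Here is a small numerical sketch of that asymptotic; the function name `cbrt_gap_ratio` is just an illustrative helper of mine.

```python
def cbrt_gap_ratio(n):
    """Return 3 * n^(2/3) * ((n+1)^(1/3) - n^(1/3)), which should tend to 1."""
    gap = (n + 1) ** (1 / 3) - n ** (1 / 3)
    return 3 * n ** (2 / 3) * gap


for n in (10, 1000, 10**6):
    print(n, cbrt_gap_ratio(n))
```

The printed ratios approach $1$ from below, consistent with replacing $\frac{1}{\sqrt[3]{n+1}-\sqrt[3]{n}}$ by $3\sqrt[3]{n^2}$ inside the limit.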

5) The sequence $\{x_n\}$ is convergent.

Proof. By 4), there exists an $N$ such that ![x_n < \sqrt[3]{n}](https://s0.wp.com/latex.php?latex=x_n+%3C+%5Csqrt%5B3%5D%7Bn%7D&bg=ffffff&fg=000000&s=1&c=20201002) for all $n \ge N,$ and so

![\displaystyle x_{n+1}-x_n=\frac{x_n^2}{n^2} < \frac{\sqrt[3]{n^2}}{n^2}=\frac{1}{n\sqrt[3]{n}}.](https://s0.wp.com/latex.php?latex=%5Cdisplaystyle+x_%7Bn%2B1%7D-x_n%3D%5Cfrac%7Bx_n%5E2%7D%7Bn%5E2%7D+%3C+%5Cfrac%7B%5Csqrt%5B3%5D%7Bn%5E2%7D%7D%7Bn%5E2%7D%3D%5Cfrac%7B1%7D%7Bn%5Csqrt%5B3%5D%7Bn%7D%7D.&bg=ffffff&fg=000000&s=1&c=20201002)

Hence $\{x_n\}$ is bounded, because the series ![\sum \frac{1}{n\sqrt[3]{n}}](https://s0.wp.com/latex.php?latex=%5Csum+%5Cfrac%7B1%7D%7Bn%5Csqrt%5B3%5D%7Bn%7D%7D&bg=ffffff&fg=000000&s=1&c=20201002) converges, and so the sequence $\{x_n\}$ converges because it is increasing.
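To see step 5) in action, one can compare the growth of $x_n$ beyond some index with the corresponding partial sum of $\sum \frac{1}{n\sqrt[3]{n}}.$ The sketch below rests on assumptions of mine: the starting value $x_1=0.5,$ the cut-off $N=1000$ and the helper `iterate` are illustrative only.

```python
def iterate(x1, steps):
    """Return [x_1, ..., x_steps] for x_{n+1} = x_n + x_n**2 / n**2."""
    xs = [x1]
    for n in range(1, steps):
        x = xs[-1]
        xs.append(x + x * x / (n * n))
    return xs


# Assumption: x_1 = 0.5 is an arbitrary starting value in (0, 1).
xs = iterate(0.5, 100_000)

# For this starting value, x_n < cbrt(n) already holds at every computed n.
below_cube_root = all(x < n ** (1 / 3) for n, x in enumerate(xs, start=1))

# Growth of x_n from n = N up to the last computed term, compared with
# the matching partial sum of 1 / (n * cbrt(n)).
N = 1000
growth = xs[-1] - xs[N - 1]
tail_bound = sum(1 / (n * n ** (1 / 3)) for n in range(N, len(xs)))

print("x_n < cbrt(n) throughout:", below_cube_root)
print("growth after N:", growth, " bound:", tail_bound)
print("last computed term:", xs[-1])
```

The growth after $N$ stays below the partial sum, and the partial sums themselves are bounded because the series converges.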

Exercise. Regarding step 5) of the solution, explain why the fact that the series ![\sum \frac{1}{n\sqrt[3]{n}}](https://s0.wp.com/latex.php?latex=%5Csum+%5Cfrac%7B1%7D%7Bn%5Csqrt%5B3%5D%7Bn%7D%7D&bg=ffffff&fg=000000&s=1&c=20201002) converges implies that the sequence $\{x_n\}$ is bounded.

The integral in the following problem is known as Ahmed’s integral. I have no idea why the integral proposed by Zafar Ahmed became so ridiculously popular; there are many integrals that are much harder and nicer, yet never became as popular as this one. Anyway, here it is.